
AWS bills can be scary. This is mostly because there's no spending cap. AWS also hides costs all over the place. You need to know where to look and what you're actually looking at.

I'll show you the key principles for keeping your AWS costs under control.

TL;DR - Quick Cost Optimization Tips

If you're not interested in the details, here's a quick list of tips to get you started:

- 🗑️ Clean up old storage: Unused EBS volumes and snapshots are easy to forget and quietly drain your budget. Delete them whenever you can.

- 🗄️ Turn off idle databases: RDS instances running for "just in case" reasons are a classic waste. Shut down what you're not actively using.

- 📏 Pick the right instance size: Oversized EC2s are a money pit. Downsize or switch to smaller types that actually fit your real workload.

- 🚀 Switch to Graviton where possible: These ARM-based instances are cheaper and often faster as long as your workload supports ARM binaries.

- ⚡ Use Spot Instances for batch or flexible jobs: If your workload can handle interruptions, Spot pricing is a massive discount.

- 💰 Commit to Reserved Instances or Savings Plans: For anything predictable, locking in a lower rate pays off quickly.

- 🔄 Set up S3 lifecycle rules: Let S3 automatically move or delete old data so you're not paying for stuff nobody touches.

- 🧠 Try S3 Intelligent-Tiering: AWS can move your files between storage classes based on access, saving you from overpaying.

- 🔍 Use Compute Optimizer: Let AWS tell you what's oversized or underused instead of guessing.

- 🏢 Consolidate accounts with AWS Organizations: Grouping accounts can unlock bigger discounts and makes billing less of a headache.

- 📈 Use Auto Scaling: Don't pay for idle servers at night or on weekends. Let AWS scale your compute up and down with demand.

- 🌍 Run batch jobs in cheaper regions: Some AWS regions are just less expensive. Move non-critical workloads there and pocket the difference.

- 🔌 Remove unused Elastic IPs: Elastic IPs cost money even when nothing's attached. Release them if you don't need them.

- 🌐 Cut data transfer costs with CloudFront: Serving content from edge locations can seriously reduce expensive data transfer fees.

- 📊 Track spending with Cost Explorer and Budgets: Set up alerts and dashboards so you catch surprises before they hit your bill.

- 🌉 Use VPC Gateway Endpoints: Skip NAT Gateway fees for S3 and DynamoDB by routing traffic through Gateway Endpoints.

- 🔐 ECR/Lambda Code Vulnerability Scanning: Use it thoughtfully, not on every build/push—scanning costs add up fast.

- 🎯 Identify unused NAT GWs & ALBs: Kubernetes creates lots of those—clean up the ones you don't need.

- 📈 Proper Auto-Scaling Rules: Use the right metrics to properly auto-scale based on demand, not just CPU/memory.

- 💾 EBS GP2 to GP3: Switch to GP3 volumes—much cheaper with better performance.

- ♻️ ECR Lifecycle Rules: Auto-delete unused images so you're not paying for every failed or test container version nobody uses.

- 🌙 Shutdown Rules for non-Prod environments: Stop instances when they're not needed, especially outside business hours.

- 📉 Sampling for Logs & Metrics: Reduce CloudWatch Ingest Costs by sampling instead of logging everything.

- 🕰️ Set CloudWatch Log Retention: Set to 30, 14, or even 7 days for dev environments to save on storage costs.

- 🏗️ Deregister Old AMIs: Deregistering an AMI does not delete the EBS snapshots behind it, so clean up both the AMI and its snapshots.

- 🏞️ Refined Failover Setups: Does it make sense to run multiple regions with active-active? Switch to active-passive or Serverless-First approaches.

- 🏗️ Reduce Over-engineering: Domain-driven design is good, but don't overdo it—smart resource sharing can save serious money.

- 🌐 Switch Lambda to IPv6: VPC-attached functions in dual-stack subnets can reach the internet through an egress-only internet gateway, so they no longer need an expensive NAT Gateway.

- ↔️ Zone-Affinity for Data Transfer: Cross-AZ and cross-region traffic costs money - collocate resources within the same AZ.

- 📝 Code Profiling: Optimizing memory usage or execution time in your application code can drastically lower compute bills.

- 💾 SQL Optimizations: Reduce load on databases. The impact is especially big with unoptimized ORM queries (Prisma, for example), which can happily ignore your indexes.

- 🏗️ Thoughtful Quality Gates: Use quality gates like Unit/E2E/IT reasonably - on-demand priced CI is expensive as hell.

If you focus on these points, you'll definitely be able to save a lot of money. Generally, these are the most common pitfalls that lead to unnecessary spending.

We'll go into some of them in more detail in the following sections.

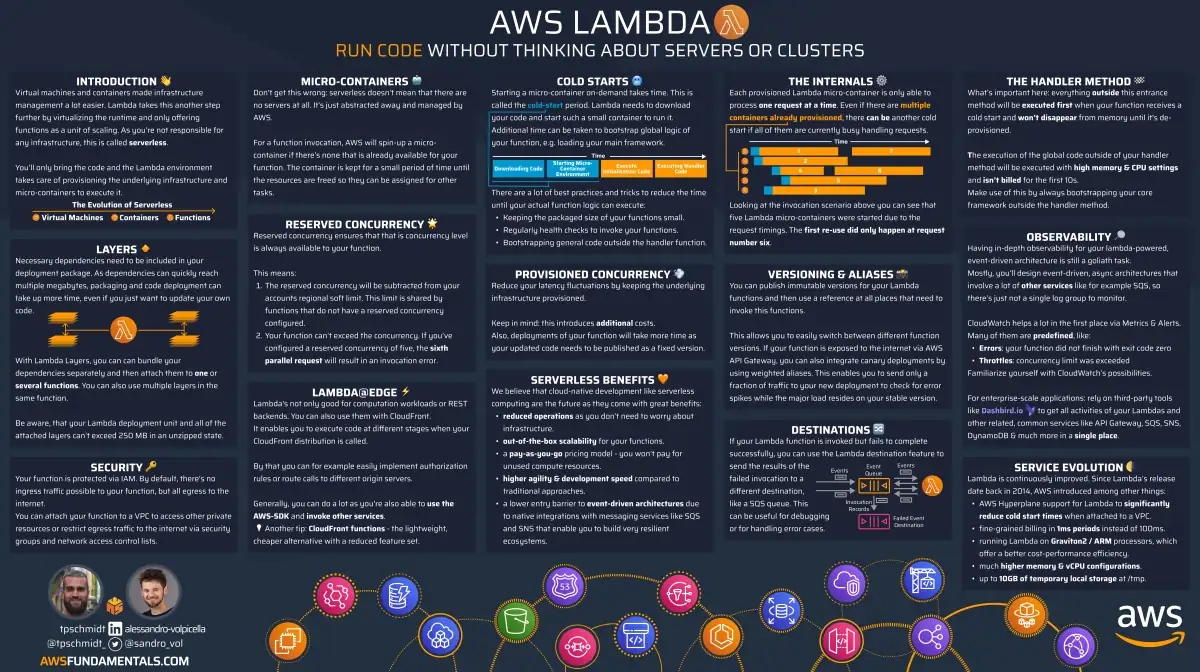

AWS Lambda on One Page (No Fluff)

Skip the 300-page docs. Our Lambda cheat sheet covers everything from cold starts to concurrency limits - the stuff we actually use daily.

HD quality, print-friendly. Stick it next to your desk.

Understanding Your AWS Bill

Before you can optimize costs, you need to understand where your money is actually going. AWS bills are somewhat opaque - they show you the total damage but rarely explain why you're paying what you're paying.

The main factors that determine your AWS spending boil down to three key areas:

- Cost allocation - Who is spending money on what?

- Cost tracking - How much is being spent?

- Cost control - How can we control costs?

We'll cover each of these in the following sections.

Cost Allocation

Cost allocation is the foundation of cost optimization. Without it, you're flying blind. You have no idea which team or service is burning through your budget.

Think of it like this: if you don't know which team is spending money on what, how can you hold anyone accountable? How can you identify the biggest cost drivers? How can you make informed decisions about where to focus your optimization efforts?

Cost allocation gives you visibility into the "who" and "what" behind every dollar spent. It transforms your AWS bill from a mysterious monthly charge into something you can actually understand.

This visibility naturally leads to the next step: using AWS Cost Explorer to dive deeper into your spending patterns!

The AWS Cost Explorer

AWS Cost Explorer is where you actually figure out what's costing you money. It takes your messy billing data and shows it in charts that make sense. You can filter by service, region, or whatever tags you've set up.

Cost Explorer grabs all your billing data and actually makes it readable. The regular billing section is basically useless - this is much better.

You can slice costs by service, region, account, or any custom tags you've created. The data refreshes at least once every 24 hours, so you're not looking at stale numbers.

Why does this matter? Because Cost Explorer turns your confusing bill into something you can actually use. Instead of staring at a massive CSV export (looking at you, billing section), you can spot trends, catch weird spikes, and see which services are eating your budget.

But the real power comes when you combine Cost Explorer with proper cost allocation tags. This combination gives you the granular visibility needed to make smart decisions!

Implementing Cost Allocation Tags

Cost allocation tags are the secret weapon for cost transparency. They're key-value pairs you attach to AWS resources. Once you activate a tag key as a cost allocation tag in the Billing console, it starts showing up in your billing data.

Tags work by categorizing your resources along (hopefully!) meaningful dimensions.

You might tag resources by team (e.g., Team=Touchpoint), environment (e.g., Environment=Production), project (e.g., Project=UserPortal), or any other relevant category.

There's no predefined template that you must use.

Choose whatever works for you!

When properly implemented, tags give you the ability to slice and dice your costs in Cost Explorer.

Want to see how much the Touchpoint team spent on EC2 last month?

Filter by Team=Touchpoint and Service=EC2.

Need to understand production vs. development costs?

Group by Environment tag.

The environment example isn't the best one. If you're serious about AWS, you should consider creating dedicated accounts for each environment!

The key is consistency and automation.

Using Terraform's default tags (other IaC tools like Pulumi or CDK offer equivalents) together with automated tagging strategies ensures every resource is properly categorized without manual intervention.

With Terraform, you can even use the default_tags block on the provider level!

```hcl
provider "aws" {
  default_tags {
    tags = {
      Team        = "Touchpoint"
      Environment = "Production"
      Project     = "UserPortal"
    }
  }
}
```

This prevents the common problem of resources being created without proper tags, leaving you with "untagged" costs that are impossible to allocate.
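One caveat is worth a sketch: user-defined tags only become a grouping dimension in Cost Explorer after their keys have been activated as cost allocation tags. The following is a minimal sketch, assuming a recent AWS provider version and that the keys have already appeared on at least one resource. In an AWS Organization it would have to run against the management account. The key names simply mirror the example above.

```hcl
# Tag keys to activate. These mirror the default_tags example and are
# assumptions about your own tagging scheme.
locals {
  cost_allocation_tag_keys = ["Team", "Environment", "Project"]
}

# Activate each key as a user-defined cost allocation tag so it can be
# used for filtering and grouping in Cost Explorer.
resource "aws_ce_cost_allocation_tag" "this" {
  for_each = toset(local.cost_allocation_tag_keys)

  tag_key = each.value
  status  = "Active"
}
```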

Setting Up Budgets and Alerts

AWS Budgets provide automatic notifications when you cross spending thresholds, preventing budget surprises. It's quick to set up and it's definitely one of the first things you should do.

Budgets work by monitoring your actual spending against predefined thresholds. You can set up multiple budget types: cost budgets (tracking dollar amounts), usage budgets (tracking resource consumption), or RI utilization budgets (ensuring you're getting value from reserved capacity).

The real value comes from the alerting system. With Budgets, you'll get notified when you're either about to exceed your budget or you've already exceeded it. These alerts can be sent via email or SNS, and from SNS you can forward them to Slack, Teams, or any webhook, allowing you to integrate them into your existing notification systems.

Setting up budgets is straightforward but requires some planning. Start with a monthly cost budget that covers your expected spending. Then add more granular budgets for specific services or teams that tend to have variable costs. Consider setting thresholds at 80% and 100% of your budget to give you early warning before you hit the limit.

Budgets don't have to trigger any automation to be worth it. They're immediately useful as a safeguard against unexpected spending.

But it's even better to use the alerts to trigger cost reviews, investigate spending spikes, or adjust your optimization strategies.
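As a rough sketch of the 80% / 100% setup described above, a monthly cost budget in Terraform could look like this. The limit of 1000 USD, the budget name, and the `alert_email` variable are placeholders, not values from this article.

```hcl
variable "alert_email" {
  description = "Hypothetical recipient for budget alerts"
  type        = string
}

resource "aws_budgets_budget" "monthly_total" {
  name         = "monthly-total-cost"
  budget_type  = "COST"
  limit_amount = "1000" # assumed monthly limit, adjust to your spend
  limit_unit   = "USD"
  time_unit    = "MONTHLY"

  # Early warning: actual spend has passed 80% of the budget.
  notification {
    comparison_operator        = "GREATER_THAN"
    threshold                  = 80
    threshold_type             = "PERCENTAGE"
    notification_type          = "ACTUAL"
    subscriber_email_addresses = [var.alert_email]
  }

  # Forecast alert: the month is projected to end above 100%.
  notification {
    comparison_operator        = "GREATER_THAN"
    threshold                  = 100
    threshold_type             = "PERCENTAGE"
    notification_type          = "FORECASTED"
    subscriber_email_addresses = [var.alert_email]
  }
}
```

Combining an `ACTUAL` and a `FORECASTED` notification covers both cases: "already over" and "about to be over".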

The Cost Widget in the AWS Console

One last thing that is super useful and doesn't require any setup: the AWS Console offers a nice widget that gives you an overview of your costs. It's active by default and you should probably not get rid of it!

This widget provides a quick snapshot of your current month's spending without navigating to Cost Explorer. It shows your month-to-date costs, projected monthly costs, and a simple breakdown by service. While it's not as detailed as Cost Explorer, it's perfect for daily cost awareness and catching spending anomalies early.

The widget updates as new billing data comes in, which is typically within a day rather than truly real time, so you get a reasonably current view of your spending. It's particularly useful for teams that want to maintain cost awareness without diving deep into detailed reports.

Disclaimer: if you're using AWS Organizations with multiple accounts and consolidated billing, you might not see the actual costs in the widget.

Resource Optimization Strategies

Let's talk about the most common cost optimization strategies.

We'll cover the following topics:

- Deleting Orphaned Resources 🗑️ - resources silently killing your budget

- Storage Cost Optimization 💾 - right-sizing your storage

- Compute Cost Optimization ⚡️ - right-sizing your compute

- Network Cost Reduction 🌐 - reducing data transfer costs

- Monitoring and Observability 🔍 - monitoring costs

Deleting Orphaned Resources 🗑️

Orphaned resources are the silent budget killers that accumulate over time. These are mostly manually created resources that nobody remembers making.

Your Django app from six months ago probably left behind an EBS volume. That test Lambda function? It sits idle, but its CloudWatch log group still stores everything it ever logged. Those RDS snapshots from "just in case"? They're costing you money every month.

Tagging helps you find these orphans. If you've been tagging properly, orphaned resources stick out like a sore thumb. No tags usually means nobody owns them.

Use AWS Resource Explorer to hunt these down. Sort by "last modified" and look for resources without proper tags. Then delete everything you don't recognize (after double-checking, obviously).

Storage Cost Optimization 💾

Storage is where your bill can spiral out of control fast. Not all your data needs the highest performance tier.

S3 Storage Classes: Most teams just dump everything in Standard storage. That works for frequently accessed files, but it's the most expensive option. If you're storing logs or backups that nobody looks at, move them to IA or Glacier. Intelligent-Tiering does this automatically and can save significant money.
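A lifecycle rule for such a log or backup bucket might be sketched as follows. The bucket variable and the day thresholds (30, 90, 365) are illustrative assumptions. Pick values that match how long you actually need the data.

```hcl
variable "log_bucket_name" {
  description = "Name of an existing bucket (hypothetical) holding logs or backups"
  type        = string
}

resource "aws_s3_bucket_lifecycle_configuration" "logs" {
  bucket = var.log_bucket_name

  rule {
    id     = "archive-then-expire"
    status = "Enabled"

    # Empty filter: the rule applies to every object in the bucket.
    filter {}

    # Move to Infrequent Access after 30 days.
    transition {
      days          = 30
      storage_class = "STANDARD_IA"
    }

    # Move to Glacier after 90 days.
    transition {
      days          = 90
      storage_class = "GLACIER"
    }

    # Delete objects after one year.
    expiration {
      days = 365
    }
  }
}
```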

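EBS Volume Right-sizing: An oversized volume that's barely using 10% of its space is wasting money, because EBS bills for provisioned capacity, not for what you actually store. Volumes are often provisioned with far more space than needed "for future growth". Check real disk usage before acting, and note that CloudWatch only reports disk space if the CloudWatch agent (or another monitoring tool) is running on the instance. Also be aware that EBS volumes can be grown in place but not shrunk: to downsize, create a smaller volume, copy the data over, and swap it in. For new volumes, provision close to actual need and grow on demand.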

EBS Snapshot Management: EBS snapshots accumulate costs quickly. Daily snapshots can easily cost more than the volume itself. Implement lifecycle policies to delete old snapshots automatically. Most teams only need 7-30 days of snapshots for recovery.
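One way to automate this is Amazon Data Lifecycle Manager. The sketch below assumes an existing IAM role that DLM can use (`dlm_role_arn`) and a hypothetical `Backup = "true"` tag on the volumes you want covered. It takes a daily snapshot and keeps the most recent 14.

```hcl
variable "dlm_role_arn" {
  description = "ARN of an existing IAM role with DLM permissions (assumed)"
  type        = string
}

resource "aws_dlm_lifecycle_policy" "daily_snapshots" {
  description        = "Daily EBS snapshots, keep the last 14"
  execution_role_arn = var.dlm_role_arn
  state              = "ENABLED"

  policy_details {
    resource_types = ["VOLUME"]

    # Only volumes carrying this (hypothetical) tag are snapshotted.
    target_tags = {
      Backup = "true"
    }

    schedule {
      name = "daily-keep-14"

      create_rule {
        interval      = 24
        interval_unit = "HOURS"
        times         = ["03:00"] # UTC
      }

      # Older snapshots are deleted automatically.
      retain_rule {
        count = 14
      }

      copy_tags = true
    }
  }
}
```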

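RDS Storage Right-sizing: Just like EBS volumes, RDS instances are often provisioned with excessive storage. If you're only using 50GB of a 500GB database, you're paying for 90% you don't use. Monitor the FreeStorageSpace metric in CloudWatch. Allocated RDS storage can't simply be reduced by modifying the instance, so shrinking usually means migrating to a new, smaller instance. For new databases, start small and let storage autoscaling grow the allocation when needed.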

Compute Cost Optimization ⚡️

Compute is where most teams blow their budget. You pick a c5.2xlarge because it "feels safer" than a t3.medium. But that larger instance can cost significantly more for the same workload.

Instance Right-sizing: Most applications run perfectly well on smaller instance types. Start small and monitor CPU and memory usage with CloudWatch. Scale up only when you actually hit performance limits. Choosing the largest instance type "just to be safe" is one of the most common and expensive mistakes.

Spot Instances for Batch Jobs: If your job can handle interruptions, Spot instances often cost 60-80% less than On-Demand. Watch for the two-minute interruption notice, which EC2 publishes through instance metadata and EventBridge, and checkpoint work so you can resume on a replacement instance. Your nightly data processing doesn't need guaranteed uptime, so Spot will save you serious money.

Reserved Instances vs. Savings Plans: For any predictable, long-running workload, you should commit to a pricing model to get significant discounts compared to on-demand rates. The two main options are Reserved Instances and Savings Plans.

- Reserved Instances: These provide a large discount in exchange for a commitment to a specific instance family, region, and term (1 or 3 years). They are best when you have a highly stable and predictable workload.

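- Savings Plans: These are more flexible. You commit to a certain amount of hourly spend for a 1 or 3-year term. With Compute Savings Plans, the discount automatically applies to EC2, Fargate, and Lambda usage across different instance families and regions. EC2 Instance Savings Plans offer a deeper discount but are tied to one instance family in one region.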

Here's a quick comparison:

| Feature | Reserved Instances (Standard) | Savings Plans (Compute) |
|---|---|---|
| Discount | Highest (up to 72%) | Very high (up to 66%) |
| Flexibility | Low (locked to instance family & region) | High (applies across families, regions, OS) |
| Applies To | EC2, RDS, Redshift, etc. (per service) | EC2, Fargate, Lambda |
| Best For | Extremely stable, unchanging workloads | Dynamic or evolving workloads |

For most use cases today, Savings Plans offer a better balance of savings and flexibility. Reserved Instances can still save money, but only if they are actually used. Teams often buy RIs for workloads that later change or get decommissioned, leaving them paying for capacity they don't need. Regularly review your RI utilization to avoid this pitfall.

Lambda Right-sizing and Optimization: Lambda costs are based on execution duration, memory allocation, and the number of requests. Optimizing it involves a few key areas:

- Memory Tuning: Lambda CPU is allocated proportional to memory. Finding the sweet spot is crucial. Too little memory slows down your function (increasing duration costs), while too much wastes money. Use tools like AWS Lambda Power Tuning to automatically find the most cost-effective memory configuration for your function.

- Architecture: Switch to ARM/Graviton2 (arm64) where possible. It offers better price-performance than x86 for many workloads, resulting in lower costs for the same execution speed. Lambda functions default to x86, so every function that hasn't been switched explicitly is a candidate.

- Provisioned Concurrency: If you have predictable, high-traffic functions, Provisioned Concurrency can be cheaper than letting them scale from zero every time. It keeps a set number of instances warm, but be careful—you pay for it even if it's not used.
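To make the memory and architecture settings concrete, here is a minimal sketch of a function definition. The function name, role, deployment package, runtime, and the 512 MB value are all placeholders. The right memory size is whatever a tool like Lambda Power Tuning reports for your workload.

```hcl
variable "lambda_role_arn" {
  description = "ARN of an existing execution role (assumed)"
  type        = string
}

variable "lambda_zip_path" {
  description = "Path to a deployment package built for arm64 (assumed)"
  type        = string
}

resource "aws_lambda_function" "worker" {
  function_name = "example-worker" # hypothetical name
  role          = var.lambda_role_arn
  filename      = var.lambda_zip_path
  handler       = "index.handler"
  runtime       = "nodejs20.x"

  # Opt in to Graviton; the default would be x86_64.
  architectures = ["arm64"]

  # CPU scales with memory, so tune this value instead of guessing.
  memory_size = 512
  timeout     = 10
}
```

Native dependencies have to be compiled for arm64 before switching, which is why the package path is called out as an assumption.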

Network Cost Reduction 🌐

Network costs are the sneaky fees that catch you off guard. Data transfer between regions can get expensive fast, and traffic between Availability Zones within a region is billed too.

Data Transfer Optimization: A big hidden expense is moving data between regions or Availability Zones. Another is the NAT Gateway. Use VPC Gateway Endpoints for DynamoDB and S3 to bypass NAT Gateway processing fees. In-region traffic from EC2 to S3 or DynamoDB is free, but a NAT Gateway charges a per-GB processing fee for any data passing through it. Gateway Endpoints carry no charge of their own and route that traffic directly, which avoids the fee.
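A sketch of both Gateway Endpoints in Terraform, assuming you pass in your own VPC ID, region, and the route tables of your private subnets (all three variables are placeholders):

```hcl
variable "region" {
  description = "Region of the VPC, e.g. eu-central-1 (assumed)"
  type        = string
}

variable "vpc_id" {
  description = "ID of an existing VPC (assumed)"
  type        = string
}

variable "private_route_table_ids" {
  description = "Route tables of the private subnets (assumed)"
  type        = list(string)
}

# One Gateway Endpoint each for S3 and DynamoDB. Traffic to these
# services is then routed directly instead of through the NAT Gateway.
resource "aws_vpc_endpoint" "gateway" {
  for_each = toset(["s3", "dynamodb"])

  vpc_id            = var.vpc_id
  service_name      = "com.amazonaws.${var.region}.${each.value}"
  vpc_endpoint_type = "Gateway"
  route_table_ids   = var.private_route_table_ids
}
```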

Cross-Region Strategy: If you're running active-active multi-region setups, data transfer costs add up fast. Do you really need that complexity? Consider a single region with a disaster recovery fallback instead. AWS services are resilient - you might not need multi-region deployment.

Load Balancer Optimization: Application Load Balancers have an hourly charge plus usage-based LCU (Load Balancer Capacity Unit) charges. Running ALBs 24/7 can cost significantly more than you expect, especially when every service gets its own. For development environments, consider using ALBs only during business hours.

Elastic IP Cleanup: Unused Elastic IPs are a common waste. AWS bills every public IPv4 address by the hour, so an Elastic IP that isn't attached to anything is pure cost. Multiply that by the number of developers who forgot to release IPs after testing. It adds up fast and is completely avoidable. Regularly audit your Elastic IPs and release any that aren't in use.

Monitoring and Observability 🔍

Monitoring costs are ironic - you spend money to track spending. CloudWatch can get expensive fast if you're not careful.

CloudWatch Log Management: Log ingestion costs are much higher than storage costs. The real killer is the ingestion volume. Most teams log way more than they need.

Set log retention to 7-30 days for most services. Use sampling rates of 1% or lower for verbose logs in production, since you don't need every log line. Powertools for AWS Lambda helps configure sampling correctly.
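Retention is easiest to enforce when the log group itself is managed as code. A minimal sketch, assuming a hypothetical `environment` variable and log group name:

```hcl
variable "environment" {
  description = "Deployment stage, e.g. dev or prod (assumed naming)"
  type        = string
}

resource "aws_cloudwatch_log_group" "app" {
  name = "/aws/lambda/example-worker" # hypothetical log group

  # Keep production logs for 30 days, everything else for 7.
  retention_in_days = var.environment == "prod" ? 30 : 7
}
```

If the log group was already created implicitly (for example by Lambda), it would need to be imported into the Terraform state first. Without a retention setting, log groups keep data forever.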

CloudWatch Metrics and Dashboards: High-resolution metrics provide data every second but cost significantly more than standard one-minute metrics. Only enable them for critical, short-term debugging.

CloudWatch dashboards also have associated costs. Beyond a small free tier, each dashboard has a monthly fee. Additionally, the API calls that populate the dashboards with metrics add to your bill every time they are refreshed. Limit dashboard creation, control access, and reduce refresh rates to keep these costs in check.

Alarms: CloudWatch alarms add up quickly when teams create one for every possible situation. Focus on actionable metrics and alarms only. Most alarms never trigger and provide no value, so regularly review and remove unnecessary ones.

Common Cost Pitfalls

AWS cost optimization isn't just about doing the right things. It's also about avoiding the common mistakes that drain your budget month after month. These pitfalls are so common that almost every team falls into them at some point.

Development vs. Production Separation

One of the biggest cost killers is mixing development and production workloads in the same account. Without proper separation, you end up paying production prices for development resources that run 24/7.

The classic scenario: your dev team spins up a few EC2 instances for testing. They forget to turn them off over the weekend. Suddenly you're paying for instances that nobody's using.

AWS Organizations solves this elegantly. Create separate accounts for each environment and use consolidated billing. This gives you:

- Clear cost separation between environments

- Different IAM policies for each stage

- Easier cleanup when projects end

- Better cost allocation and accountability

But even with separate accounts, you need guardrails. Set up strict budgets for development accounts. Use automated shutdown policies for non-production resources. Consider using AWS Service Catalog to limit what developers can provision.

The key insight: development environments should cost a fraction of production, not almost the same.
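For dev workloads that run in an Auto Scaling group, an automated shutdown policy can be as small as two scheduled actions. This is a sketch under assumptions: the group name, time zone, working hours, and sizes are placeholders.

```hcl
variable "dev_asg_name" {
  description = "Name of an existing dev Auto Scaling group (assumed)"
  type        = string
}

# Scale the dev group down to zero every weekday evening.
resource "aws_autoscaling_schedule" "dev_stop_evening" {
  scheduled_action_name  = "dev-stop-evening"
  autoscaling_group_name = var.dev_asg_name
  recurrence             = "0 19 * * 1-5" # 19:00, Monday to Friday
  time_zone              = "Europe/Berlin"
  min_size               = 0
  max_size               = 0
  desired_capacity       = 0
}

# Bring it back up every weekday morning.
resource "aws_autoscaling_schedule" "dev_start_morning" {
  scheduled_action_name  = "dev-start-morning"
  autoscaling_group_name = var.dev_asg_name
  recurrence             = "0 7 * * 1-5" # 07:00, Monday to Friday
  time_zone              = "Europe/Berlin"
  min_size               = 1
  max_size               = 2
  desired_capacity       = 1
}
```

Because the last stop fires on Friday evening and the next start on Monday morning, the group stays at zero over the weekend.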

AWS Cost Optimization Tools

Beyond AWS's built-in tools, there's a bunch of community-driven options that make cost optimization less painful.

AWS FinOps Dashboard

One of my favorites is the AWS FinOps Dashboard. It gives you a clean CLI-based overview of your costs. No need to navigate through the AWS console to check what's burning money.

I've integrated it into Raycast (which replaces Spotlight on macOS) so I can check costs instantly. The author even built an MCP Server for it, so you can ask AI assistants about costs in natural language.

AWS Trusted Advisor

Trusted Advisor is AWS's built-in optimization tool. It scans your account and flags optimization opportunities. The free tier only covers a handful of basic checks, mostly around security and service quotas. The cost optimization checks require a Business or Enterprise support plan.

It'll catch things like:

- Idle RDS instances

- Unattached EBS volumes

- Low-utilization EC2 instances

- Unused Elastic IPs

AWS Compute Optimizer

Compute Optimizer analyzes your EC2 usage patterns and suggests better instance types. It looks at CloudWatch metrics over 14 days and recommends rightsizing opportunities.

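The catch? You have to opt in first, and out of the box it only sees the default CloudWatch metrics such as CPU and network. Memory utilization is only considered if the CloudWatch agent is installed and reporting it. Most teams skip this step and end up with recommendations that ignore memory.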

Building a Cost Culture

I recently stumbled upon this discussion on Reddit about cost ownership.

The top answer is spot-on: cost ownership can't be centralized. It needs to be part of every team.

A team needs to own its stack from top to bottom. Including its costs.

Verticalized Team Ownership

Teams that own everything, including their costs, create better cost awareness and optimization. This is easy to say and hard to do.

In my recent experience, I've been on both ends: owning everything and not owning anything. Both approaches have their pros and cons.

In my opinion, the fully-verticalized approach is the best. It will automatically create a better understanding of cost structures, as you're confronted with your stack's cost regularly.

Cost Reviews and Accountability

To make this happen, you need (automated) processes that make costs very transparent. This means: regular cost reviews that don't suck and hold teams accountable for their spending.

Luckily, this is rather easy to do! The AWS Cost Explorer API offers everything you need to build a nice and slim cost review process.

Let's make a simple example:

```bash
aws ce get-cost-and-usage \
  --time-period Start=2025-08-01,End=2025-09-01 \
  --granularity MONTHLY \
  --metrics BlendedCost \
  --group-by Type=DIMENSION,Key=SERVICE \
  --query 'ResultsByTime[].Groups[].[Keys[0], Metrics.BlendedCost.Amount]' \
  --output table \
  --no-cli-pager
```

The output could look like this:

```
----------------------------------------------------------
|                    GetCostAndUsage                     |
+--------------------------------------+-----------------+
|  Amazon EC2                          |  156.42         |
|  Amazon S3                           |  89.73          |
|  Amazon RDS                          |  234.18         |
|  AWS Lambda                          |  12.45          |
|  Amazon CloudFront                   |  45.67          |
|  Amazon DynamoDB                     |  67.89          |
|  AmazonCloudWatch                    |  23.56          |
+--------------------------------------+-----------------+
```

Put this into an automated CRON job (e.g. via Lambda or CodeBuild) and push it to your team once a week via email, Teams or Slack. This is already a neat solution and a great start!
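If the report runs in a Lambda function, the weekly trigger could be wired up with an EventBridge rule. This is a sketch that assumes the reporting function already exists; its name and ARN are placeholders passed in as variables, and the schedule (Mondays at 08:00 UTC) is an arbitrary choice.

```hcl
variable "report_lambda_arn" {
  description = "ARN of the existing cost report function (assumed)"
  type        = string
}

variable "report_lambda_name" {
  description = "Name of the existing cost report function (assumed)"
  type        = string
}

# Fire every Monday at 08:00 UTC.
resource "aws_cloudwatch_event_rule" "weekly_cost_report" {
  name                = "weekly-cost-report"
  schedule_expression = "cron(0 8 ? * MON *)"
}

# Send the scheduled event to the reporting function.
resource "aws_cloudwatch_event_target" "invoke_report" {
  rule = aws_cloudwatch_event_rule.weekly_cost_report.name
  arn  = var.report_lambda_arn
}

# Allow EventBridge to invoke the function.
resource "aws_lambda_permission" "allow_eventbridge" {
  statement_id  = "AllowWeeklyCostReport"
  action        = "lambda:InvokeFunction"
  function_name = var.report_lambda_name
  principal     = "events.amazonaws.com"
  source_arn    = aws_cloudwatch_event_rule.weekly_cost_report.arn
}
```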

Conclusion

AWS cost optimization doesn't have to be overwhelming. Start with the low-hanging fruit: delete orphaned resources, right-size your instances, and set up basic budgets.

The biggest wins usually come from:

- Proper cost allocation with tags

- Regular cleanup of unused resources

- Right-sizing compute and storage

- Setting up automated cost monitoring

Don't try to optimize everything at once. Pick one area, fix it, then move to the next. Your future self (and your budget) will thank you.

The key is making cost optimization part of your regular workflow, not a quarterly panic session when the bill arrives.

Next-Steps Checklist

Maybe this is a good starting point for you:

- [ ] Enable AWS Budgets with 80% / 100% alerts

- [ ] Tag 100% of new resources (Team, Environment, Project)

- [ ] Activate your tag keys as cost allocation tags in the Billing console

- [ ] Schedule a weekly orphaned-resource cleanup

- [ ] Run Compute Optimizer and apply top 3 right-size actions

- [ ] Review cost dashboard every Monday

- [ ] Go through the TL;DR list at the beginning of this article

Good luck! 🍀
