Understand your Costs

I know this sounds obvious, but you need it before you can improve anything. Understanding costs in AWS means being able to use Cost Explorer efficiently. For that, you need to set up a few things:

- cost allocation tags

- granular cost data, so you can see daily costs

- reports

Once you've got these reports, you can drill down into your costs. I like to drill down on two levels:

- Domain- or Stack-Level (if you're using CDK or CloudFormation)

- Function-Level

You can get both of these by incorporating AWS Tags.

I use my own Shopify app as an example here. The app (FraudFalcon) analyzes orders in Shopify for fraud. It is a good example because it runs at quite a scale (more than 1 million orders a month) and is built completely serverless with SST.

For this app, we've set up some cost allocation tags.

For example, the tag function:name.

With this tag, we can figure out the most expensive Lambda functions.

If you're using CDK, I suggest you make use of CDK aspects for tagging your resources.

Since we are on SST, we can simply do it with this code in the sst.config.ts:

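```ts
$transform(sst.aws.Function, (args, _opts, name) => {
  // Tag every function with "<app>-<stage>-<name>".
  // Note: ??= only assigns when the function has no tags set yet.
  args.tags ??= {
    'function:name': `${$app.name}-${$app.stage}-${name}`,
  };
});
```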

And all your Lambda functions have their function names as a tag.

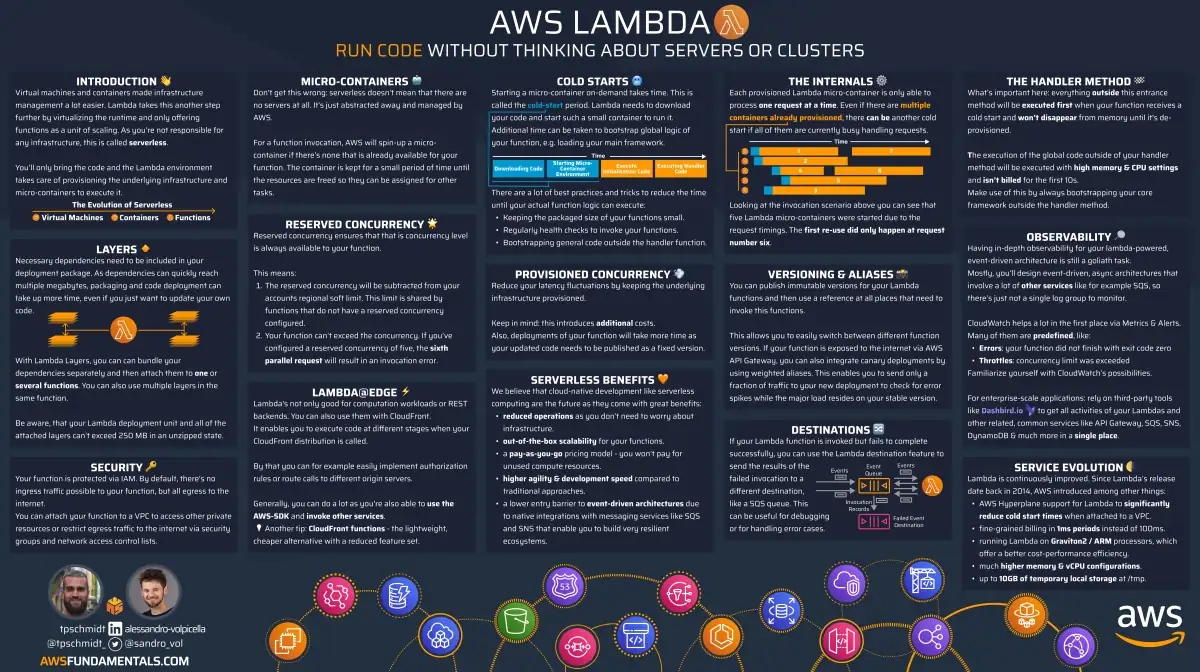


Reduce Memory

Lambda's billing model is based on GB-seconds: the memory you allocate (in GB) multiplied by the time your function runs, billed in 1 ms increments. There are two dimensions you can optimize:

- Allocated memory

- Time the function runs

The first step of optimizing the costs is to look at the allocated memory and how much of that is actually used.
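To make the billing model concrete, here is a minimal sketch of the arithmetic. The prices are assumptions (us-east-1 list prices at the time of writing, free tier ignored), so check the AWS Lambda pricing page for your region. All names in the snippet are made up for illustration.

```typescript
// Assumed us-east-1 list prices in USD; verify them for your region.
const PRICE_PER_GB_SECOND = { x86_64: 0.0000166667, arm64: 0.0000133334 } as const;
const PRICE_PER_REQUEST = 0.2 / 1_000_000;

type Architecture = keyof typeof PRICE_PER_GB_SECOND;

interface Workload {
  memoryMb: number;            // allocated memory, not used memory
  avgBilledDurationMs: number; // "Billed Duration" from the REPORT log
  invocationsPerMonth: number;
  architecture: Architecture;
}

// Monthly cost = GB-seconds * price per GB-second + requests * price per request.
function monthlyLambdaCost(w: Workload): number {
  const gbSeconds =
    (w.memoryMb / 1024) * (w.avgBilledDurationMs / 1000) * w.invocationsPerMonth;
  return (
    gbSeconds * PRICE_PER_GB_SECOND[w.architecture] +
    w.invocationsPerMonth * PRICE_PER_REQUEST
  );
}

// Same duration, half the allocated memory: the compute part of the bill halves.
const before = monthlyLambdaCost({
  memoryMb: 1024, avgBilledDurationMs: 1656, invocationsPerMonth: 1_000_000, architecture: 'x86_64',
});
const after = monthlyLambdaCost({
  memoryMb: 512, avgBilledDurationMs: 1656, invocationsPerMonth: 1_000_000, architecture: 'x86_64',
});
console.log(before.toFixed(2), after.toFixed(2));
```

The comparison only holds if the duration stays the same after reducing memory, which is not guaranteed.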

Each Lambda function prints out a REPORT log at the end of the invocation.

```
REPORT RequestId: a218ffe7-ed4f-5b08-9da3-9f8762aa74cb Duration: 1655.15 ms Billed Duration: 1656 ms Memory Size: 1024 MB Max Memory Used: 312 MB
```
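If you ever need these numbers outside of CloudWatch, the fields can be pulled out of a REPORT line with a few regular expressions. This is only a sketch: the function and type names are made up, and it assumes the line has the same shape as the example above.

```typescript
interface ReportStats {
  billedDurationMs: number;
  memorySizeMb: number;
  maxMemoryUsedMb: number;
}

// Returns null when the line is not a REPORT line in the expected shape.
function parseReportLine(line: string): ReportStats | null {
  const billed = /Billed Duration: (\d+) ms/.exec(line);
  const size = /Memory Size: (\d+) MB/.exec(line);
  const used = /Max Memory Used: (\d+) MB/.exec(line);
  if (!billed || !size || !used) return null;
  return {
    billedDurationMs: Number(billed[1]),
    memorySizeMb: Number(size[1]),
    maxMemoryUsedMb: Number(used[1]),
  };
}

// Share of the allocated memory that was actually used (0..1).
function memoryUtilization(stats: ReportStats): number {
  return stats.maxMemoryUsedMb / stats.memorySizeMb;
}

const sample =
  'REPORT RequestId: a218ffe7-ed4f-5b08-9da3-9f8762aa74cb Duration: 1655.15 ms ' +
  'Billed Duration: 1656 ms Memory Size: 1024 MB Max Memory Used: 312 MB';
const stats = parseReportLine(sample);
if (stats) {
  console.log(`${Math.round(memoryUtilization(stats) * 100)}% of allocated memory used`);
}
```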

Combine these REPORT logs with a CloudWatch Logs Insights query to understand how much memory is actually used. I always use a query like this:

```
filter @type = "REPORT"
| stats
    count(@type) as invocationsCount,
    avg(@maxMemoryUsed) / 1000 / 1000 as avgMemoryUsed,
    pct(@maxMemoryUsed, 95) / 1000 / 1000 as p95MemoryUsed,
    pct(@maxMemoryUsed, 99) / 1000 / 1000 as p99MemoryUsed,
    max(@maxMemoryUsed) / 1000 / 1000 as maxMemoryUsed,
    stddev(@maxMemoryUsed) / 1000 / 1000 as stdDevMemoryUsed,
    max(@memorySize) / 1000 / 1000 as allocatedMemory,
    concat(ceil((avg(@maxMemoryUsed) / max(@memorySize)) * 100), "%") as percentageMemoryUsed
  by bin(1min) as timeFrame
```

This query shows you:

- max memory used

- allocated memory

- how much memory is actually being used on average

The easiest find is if max memory used is way below the allocated memory. You can't imagine how often I saved about 20% of costs in several AWS accounts by just optimizing the memory with this step.

Here you can see that our Lambda function is quite overprovisioned. If max memory is not really below the allocated memory, you need to dig a bit deeper. What also often happens is that your application has very noisy neighbors.

For our app, this happens a lot. The majority of the customers have just a few orders a day. But 2 customers have over 10,000 a day. Because of that, we need to overprovision our Lambda function quite a bit just to handle the peaks.

The more projects I've seen (especially in the B2B space), the more I realized that this is very common. If that is your case, you need to find more creative ways of optimizing your memory.

One important note here:

Memory in Lambda is not just memory. It also determines the assigned compute. If you reduce the memory a lot, your Lambda function will also have less CPU available. For some workloads, this could mean the execution time goes through the roof, which again increases costs. There used to be a good overview table of how much memory maps to how much compute. As of today, I couldn't find this information anymore. Here is an overview from the great blog of the guys at fourtheorem.com.

There is an amazing tool called AWS Lambda Power Tuning from Alex Casalboni. It runs your Lambda function with various memory sizes inside a Step Functions state machine, then gives you a graph and shows you the optimal setting. In real life (especially in client projects with little dedicated time), this is not always possible. But if you have the chance, I would definitely suggest doing that.
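The trade-off between memory and duration can be sketched in a few lines. This is not how Power Tuning is implemented. It is just the underlying arithmetic, with an assumed x86 price for us-east-1 and made-up measurements.

```typescript
// Assumed x86 list price in us-east-1 (USD per GB-second); verify for your region.
const X86_PRICE_PER_GB_SECOND = 0.0000166667;

interface MemoryMeasurement {
  memoryMb: number;      // memory setting that was tested
  avgDurationMs: number; // average duration measured at that setting
}

// Compute cost of a single invocation; duration is rounded up to the next full ms.
function costPerInvocation(m: MemoryMeasurement): number {
  return (m.memoryMb / 1024) * (Math.ceil(m.avgDurationMs) / 1000) * X86_PRICE_PER_GB_SECOND;
}

// Picks the measured memory setting with the lowest cost per invocation.
function cheapestSetting(measurements: MemoryMeasurement[]): MemoryMeasurement {
  if (measurements.length === 0) throw new Error('no measurements given');
  return measurements.reduce((best, m) =>
    costPerInvocation(m) < costPerInvocation(best) ? m : best,
  );
}

// Hypothetical measurements for a CPU-bound function.
const measurements: MemoryMeasurement[] = [
  { memoryMb: 128, avgDurationMs: 3200 },
  { memoryMb: 512, avgDurationMs: 600 },
  { memoryMb: 1024, avgDurationMs: 350 },
  { memoryMb: 2048, avgDurationMs: 330 },
];
console.log(cheapestSetting(measurements).memoryMb);
```

With these invented numbers, the smallest memory setting is not the cheapest one, because the function runs so much longer with less CPU.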

Reduce timeouts

The second dimension you can optimize your Lambda function on is time. The longer your function runs, the more it costs. It is as simple as that.

Developers tend to go with defaults at the beginning and stick to them forever. Often these defaults are a 15-minute timeout and 10 GB of memory, which are the maximum values Lambda allows. This is not a good starting point. Lambda costs can escalate very, very quickly. If you have a running Lambda function, I'd start by looking at its statistics with metrics or a Logs Insights query.

I love to use Logs Insights, so let's look at an example.

```
filter @type = "REPORT"
| stats
    count(@type) as invocationsCount,
    min(@duration) / 1000 as minDuration,
    avg(@duration) / 1000 as avgDuration,
    pct(@duration, 95) / 1000 as p95Duration,
    pct(@duration, 99) / 1000 as p99Duration,
    max(@duration) / 1000 as maxDuration,
    stddev(@duration) / 1000 as stdDevDuration
  by bin(1h) as timeFrame
```

The output looks something like this. We can see that the max duration is quite okay. The timeout of this function, for example, is 15 minutes. And unfortunately, there are cases where these 15 minutes are fully used.

If we zoom out a bit and look at the past week, we can see that we even reached a timeout here:

The next step is to dig deeper, understand what is happening, and reduce the timeout. Reducing the timeout itself won't decrease the costs, of course. But it will make outliers much more visible. One prerequisite for that is proper monitoring. Without monitoring and alerts, I wouldn't make this change.
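One way to pick a tighter timeout is to derive it from the measured durations. The sketch below uses the p99 duration times a headroom factor. That factor (2 by default) is purely an assumption for illustration, not a rule, and all function names are made up.

```typescript
// Nearest-rank percentile on an ascending-sorted array.
function percentile(sortedAsc: number[], p: number): number {
  const rank = Math.ceil((p * sortedAsc.length) / 100);
  return sortedAsc[Math.max(rank, 1) - 1];
}

// Suggests a timeout in full seconds: p99 duration times an assumed headroom factor.
function suggestTimeoutSeconds(durationsMs: number[], headroomFactor = 2): number {
  if (durationsMs.length === 0) throw new Error('no durations given');
  const sorted = [...durationsMs].sort((a, b) => a - b);
  return Math.ceil((percentile(sorted, 99) * headroomFactor) / 1000);
}

// Counts the invocations that would have hit the suggested timeout.
function countAboveTimeout(durationsMs: number[], timeoutSeconds: number): number {
  return durationsMs.filter((d) => d > timeoutSeconds * 1000).length;
}

// Hypothetical data: 99 invocations at 1.2 s and one that ran the full 15 minutes.
const durations = [...Array(99).fill(1200), 900_000];
const timeout = suggestTimeoutSeconds(durations);
console.log(timeout, countAboveTimeout(durations, timeout));
```

The second number is the interesting one: those are the outliers that need an alert and a closer look before the timeout is actually lowered.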

Change to ARM Architecture

Your Lambda function can run on either the x86 or the ARM architecture. For new projects, our Lambda functions always run on ARM by default. There needs to be a reason to use x86. ARM costs about 20% less per GB-second than x86.

To cite AWS:

AWS Lambda functions running on Graviton2, using an Arm-based processor architecture designed by AWS, deliver up to 34% better price performance compared to functions running on x86 processors.

You get these savings almost for free. I say almost because we all know that there is no free lunch. There are a few things that you need to consider when switching to ARM.

I faced these challenges just recently:

- Check your dependencies + layers. Are they all supported on ARM? Looking at you, pydantic v1 🙄

- Can you bundle for ARM? I once had the issue that everything worked in my sandbox because I had bundled everything on my Mac, which is also ARM. But my CodePipeline bundled everything for x86. That was quite a hard one to figure out.

- Is the 250 MB limit for the unzipped deployment package enough? ARM dependencies are typically larger than their x86 counterparts, and ARM builds often install binaries for both x86 and ARM. When you have a Python Lambda with numpy, pandas, pydantic, and a few other dependencies, you can hit the limit on ARM but not on x86.

Overall, this can be one of the easiest or one of the hardest changes. Definitely test your workloads and don't just blindly switch. If you start a new project, take ARM as your default.
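On SST, one way to make ARM the default is the same `$transform` hook used for tagging earlier. This is a minimal sketch for `sst.config.ts`, assuming SST v3, where `architecture` accepts `"arm64"`. Functions that set an architecture explicitly keep their own value.

```typescript
// sst.config.ts, inside run(): default every function to ARM unless it opts out.
$transform(sst.aws.Function, (args) => {
  args.architecture ??= "arm64";
});
```

Keep in mind that this only changes the target architecture. Native dependencies and layers still have to be built for ARM.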

Use your Lambda less

The last point is both obvious and not. If you invoke your Lambda function less often, you pay less.

But what does that mean exactly? On the one hand, we could argue for going more "functionless", meaning using more direct integrations like VTL (API GW → DDB), Step Functions, EventBridge Pipes, etc.

So far, the development experience of those is not really good, so I always jump back to glue Lambdas, and I'm fine with that. I am sure that this will get better over time. But at the moment, I don't see the adoption of that happening. So, what else can we do?

Use batching and caching. Both are common practices for invoking fewer Lambda functions.

Yan Cui loves to say:

"caching is like a cheat code for distributed systems".

And that is true. For me, the same applies to batching when it comes to AWS costs. If each Lambda invocation receives only one message from an SQS queue, more invocations are needed.

Typically, this means more invocation time in total. If you let one invocation handle a whole batch of messages, and maybe even process the messages within that batch in parallel, you can reduce the number of invocations by a lot.

Let's look at an example. In the Shopify app, we get orders via EventBridge events into a Lambda function. That means we need to check every incoming order.

We started doing this by subscribing to an event and invoking one Lambda function for each event. This resulted in huge spikes of 700 Lambdas at the same time (and guess what - the downstream system said goodbye).

We had costs of almost $280/month.

By simply introducing a queue, batching the calls into batches of 40 (memory is the constraint here), and limiting the Lambda concurrency, we reduced the costs to about $3.

Full props to Jannik Wempe.

There are many things you can batch:

- Event source mapping of SQS to your Lambda function

- Data within S3 files (think of arrays in a JSON file)

- Sending messages to SQS or events to EventBridge

And you can even further improve things by parallelizing these batches within your Lambda functions! There are many opportunities out there.
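Here is a minimal sketch of what batching plus parallel processing can look like in an SQS-triggered handler. The types are local stand-ins for the few SQS event fields used here, and `checkOrder` is a hypothetical placeholder for the real work. Returning `batchItemFailures` only has an effect if `ReportBatchItemFailures` is enabled on the event source mapping.

```typescript
// Local stand-ins for the SQS event fields this sketch uses.
interface SqsRecord { messageId: string; body: string; }
interface SqsEvent { Records: SqsRecord[]; }
interface BatchItemFailure { itemIdentifier: string; }
interface SqsBatchResponse { batchItemFailures: BatchItemFailure[]; }

// Hypothetical business logic: rejects orders without an id.
async function checkOrder(order: { id?: string }): Promise<void> {
  if (!order.id) throw new Error('order without id');
}

// One invocation handles the whole batch and processes its messages in parallel.
async function handler(event: SqsEvent): Promise<SqsBatchResponse> {
  const outcomes = await Promise.all(
    event.Records.map(async (record): Promise<BatchItemFailure | null> => {
      try {
        await checkOrder(JSON.parse(record.body));
        return null;
      } catch {
        // Report only this message as failed so SQS retries it alone.
        return { itemIdentifier: record.messageId };
      }
    }),
  );
  const batchItemFailures = outcomes.filter(
    (o): o is BatchItemFailure => o !== null,
  );
  return { batchItemFailures };
}
```

Reporting failures per message means one bad order does not force the whole batch to be retried.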

The same can apply to caching. You can start by caching outside of the Lambda handler, in the execution environment.

For example, if we load rules from our SQL DB, we save all rules for 5 minutes in the execution environment. Fewer network hops mean less execution time, which means fewer costs.
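A minimal sketch of such a cache, assuming a hypothetical `loadRulesFromDb` function in place of the real SQL query. Because `getRules` lives in module scope, it is created once per execution environment and reused by warm invocations.

```typescript
interface CacheEntry<T> { value: T; expiresAt: number; }

// Wraps a loader so its result is reused until the TTL has passed.
// The clock is injectable to keep the cache testable.
function createTtlCache<T>(
  load: () => Promise<T>,
  ttlMs: number,
  now: () => number = Date.now,
): () => Promise<T> {
  let entry: CacheEntry<T> | undefined;
  return async () => {
    if (entry && entry.expiresAt > now()) return entry.value;
    const value = await load();
    entry = { value, expiresAt: now() + ttlMs };
    return value;
  };
}

// Hypothetical loader standing in for the real SQL query.
async function loadRulesFromDb(): Promise<string[]> {
  return ['rule-a', 'rule-b'];
}

// Module scope: survives across invocations in the same execution environment.
const getRules = createTtlCache(loadRulesFromDb, 5 * 60 * 1000);

async function rulesHandler(): Promise<number> {
  const rules = await getRules(); // hits the DB at most once every 5 minutes
  return rules.length;
}
```

Every execution environment has its own copy of the cache, so rule changes can take up to the TTL to show up everywhere.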

You could cache on the end user level on the edge with CloudFront. This would mean the request wouldn't even ever hit your backend. You can also cache on the database level so that it returns faster and maybe closer to your Lambda function.

In my opinion, this one is the hardest to implement. But it can also bring lots of benefits, often not just for your costs but also for your business logic and customers.

Summary

In summary, I would tackle these points in exactly this order. Yes, you could argue for doing ARM first. But I have seen enough cases already where it is not as straightforward as you would have thought. Real-world architectures are typically much more complicated than the Level 100 talk at re:Invent suggests 😜

I hope that helps and let's save Lambda costs together!
