Running untrusted, AI-generated code in production presents a tricky problem. Any developer building AI applications that generate code on the fly faces this challenge. You need strong security to prevent malicious or resource-heavy code from breaking your system, plus the ability to scale when workloads spike unpredictably.

The risks come from multiple sources. Malicious code can emerge from deliberate prompt engineering, accidental exploits, or random LLM outputs. More often, inefficient code consumes excessive resources by downloading massive datasets, creating infinite loops, or running expensive operations. No malicious intent required, just inefficiency that creates scaling problems.

This case study examines how Quesma, the makers of benchmarks and evals for production-ready AI agents, solved this challenge by building a secure, isolated execution environment on AWS Lambda.

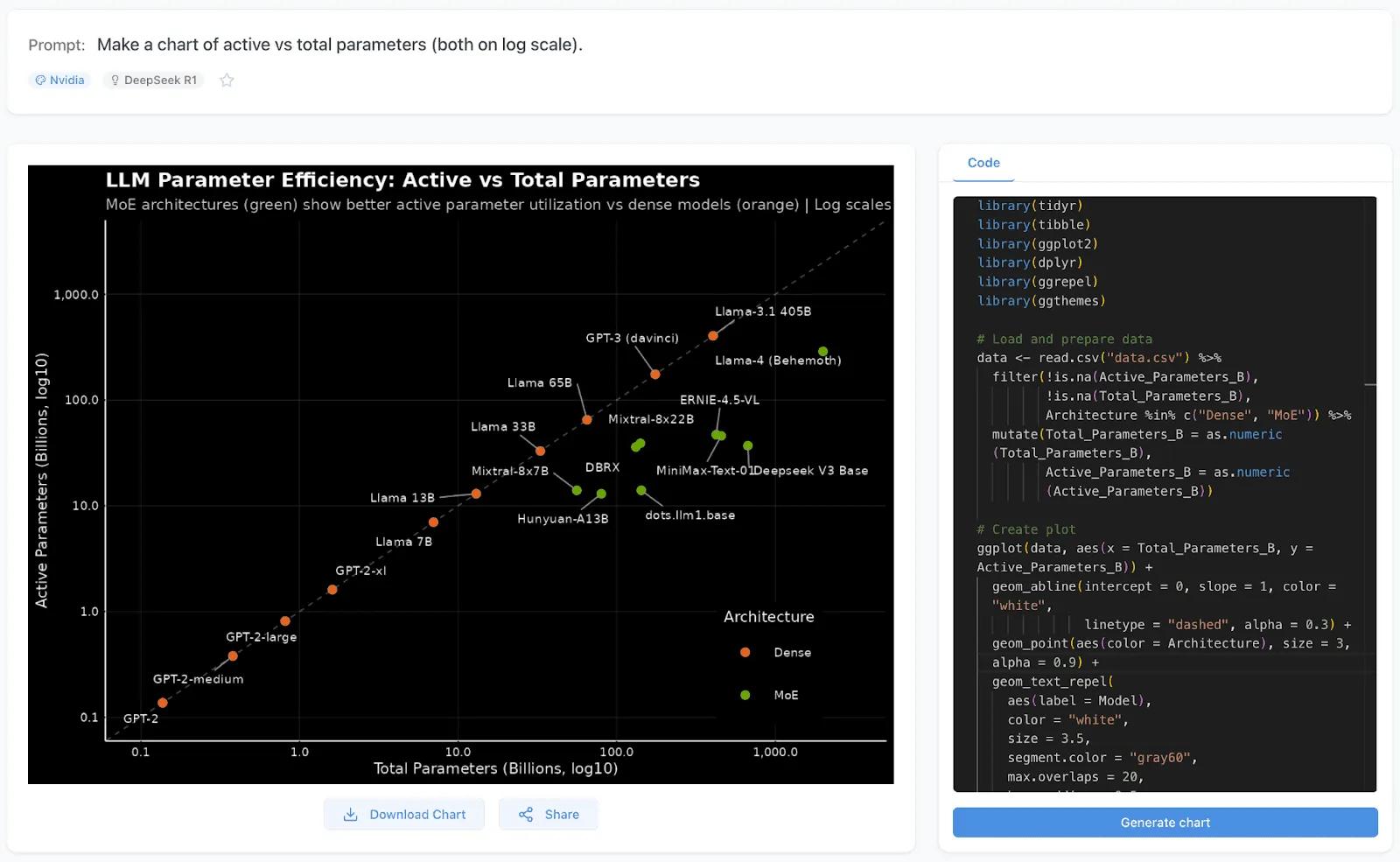

Quesma's workflow: user prompts are converted to R code, which then generates interactive dashboards and visualizations

The Initial Approach: WebAssembly in the Browser

Our first instinct was to push the security risk to users' browsers using WebAssembly. This approach had clear advantages: zero infrastructure costs, natural scaling, and a built-in safety mechanism where users could close their tab if something went wrong.

We implemented this using WebR, which runs R code directly in the browser and supports the ggplot2 library essential for creating publication-quality charts. The solution worked technically, but revealed three critical limitations:

-

Missing dependencies: While WebR had a solid package collection, the gaps between "almost everything" and "everything" caused problems in production. Standard practices like pinning packages to specific versions required workarounds that didn't work properly in WebAssembly.

-

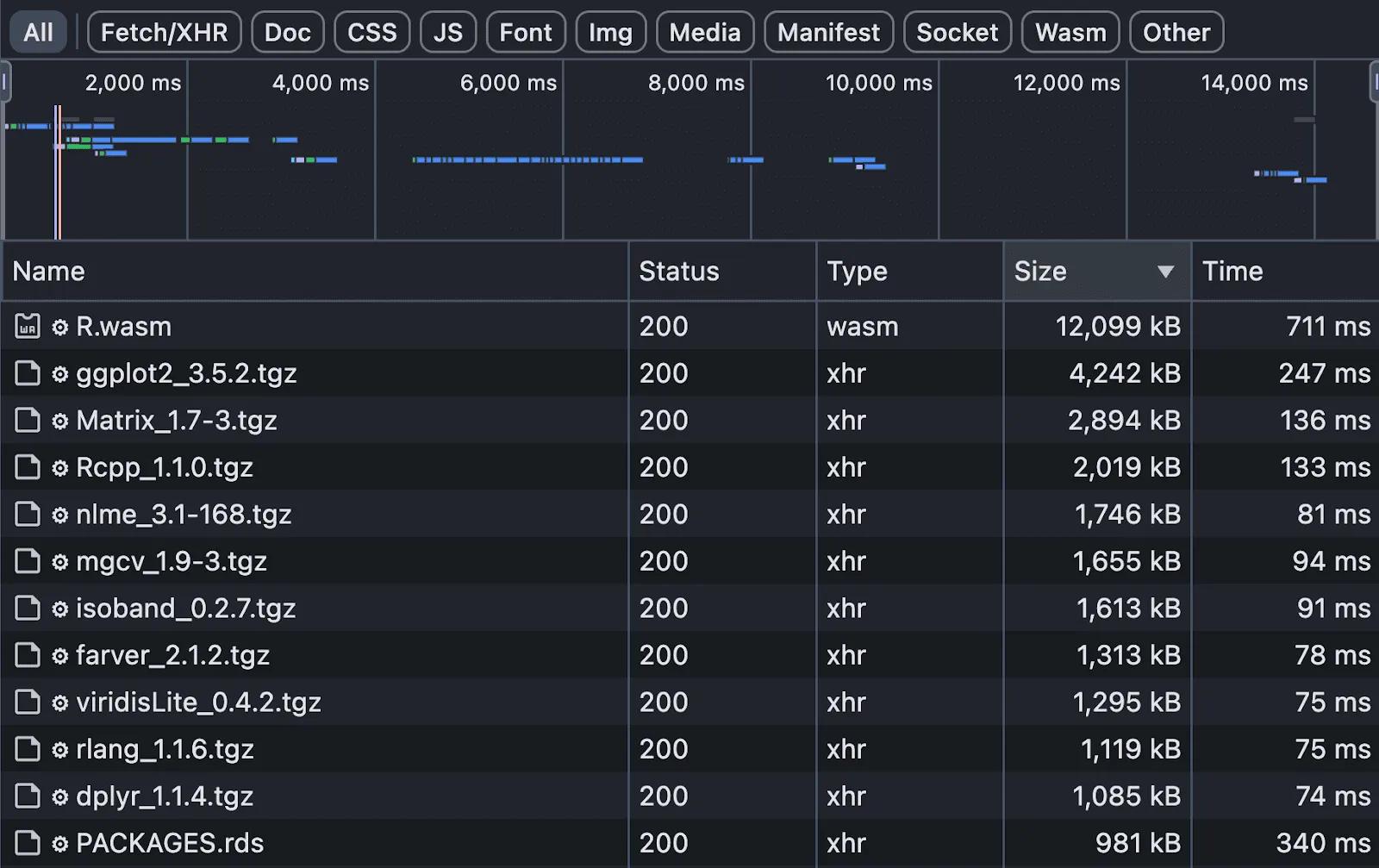

Performance bottlenecks: The base WebR library weighed 12MB, with the full tidyverse stack reaching 92MB. Even an optimized setup required 39MB of downloads. This created unusable experiences on slow connections—a reality we discovered at a conference where WiFi congestion made the application impractical.

Browser network tab revealing the performance problem: WebR and tidyverse libraries requiring massive downloads that crippled the user experience

Browser network tab revealing the performance problem: WebR and tidyverse libraries requiring massive downloads that crippled the user experience

- Complex data flows: A real application needs to save results, analyze outputs, and allow the LLM to investigate data before generating visualizations. This created complicated back-and-forth communication between the frontend and backend, becoming a major bottleneck for agentic AI workflows.
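To put the download sizes in perspective, here is a rough back-of-the-envelope sketch. The bundle sizes come from the text; the 2 Mbps and 50 Mbps link speeds are illustrative assumptions, not measurements from the conference.

```python
# Bundle sizes quoted in the article, in megabytes.
BUNDLES_MB = {"base WebR": 12, "optimized setup": 39, "full tidyverse": 92}


def download_seconds(size_mb: float, bandwidth_mbps: float) -> float:
    """Seconds to move size_mb megabytes over a link of bandwidth_mbps megabits/s."""
    return size_mb * 8 / bandwidth_mbps


def estimate(bandwidth_mbps: float) -> dict:
    """Estimated download time per bundle, ignoring latency and protocol overhead."""
    return {
        name: round(download_seconds(size, bandwidth_mbps), 1)
        for name, size in BUNDLES_MB.items()
    }


if __name__ == "__main__":
    # Assumed link speeds: a congested shared network vs. a typical broadband line.
    for mbps in (2, 50):
        print(f"{mbps} Mbps:", estimate(mbps))
```

Under these assumptions, even the optimized 39MB setup takes minutes rather than seconds on a congested link, before any R code runs.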

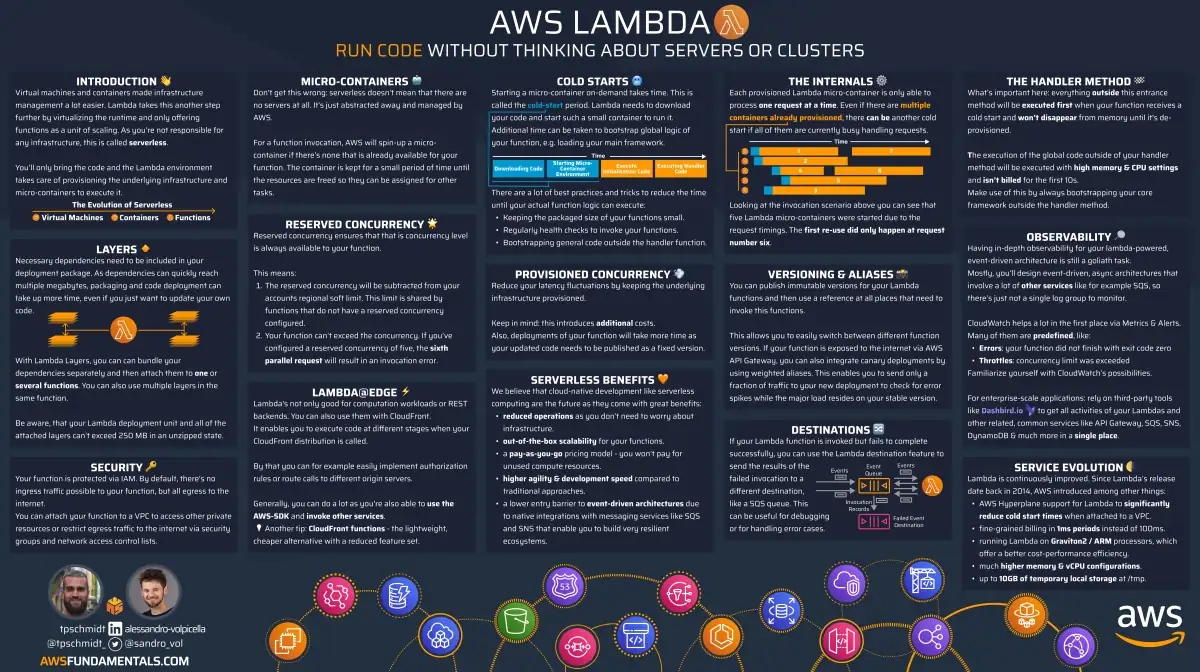

Lambda and Docker in an Isolated VPC

Moving code execution to AWS Lambda solved our main problems and gave us strong security. The solution uses three AWS services working together.

Containerized Execution Environment

We built a custom Docker container image with a specific version of R, bundling all necessary dependencies, libraries, and system fonts. This eliminated the dependency gaps from WebAssembly and ensured a consistent execution environment. Lambda's configurable timeout settings provide a simple guardrail: with a 30-second hard timeout and average chart generation taking 10 seconds, legitimate queries have buffer while no process monopolizes resources indefinitely.
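Lambda enforces its timeout at the platform level through the function's configuration, so no application code is needed for it. As a local analogue, the sketch below applies the same kind of hard limit to a child process, which can be handy when testing the container outside Lambda. The `run_with_timeout` helper and its result format are hypothetical, not Quesma's implementation.

```python
import subprocess
import sys

HARD_TIMEOUT_S = 30  # the hard timeout quoted in the text


def run_with_timeout(cmd, timeout_s=HARD_TIMEOUT_S):
    """Run cmd as a child process and kill it if it exceeds timeout_s seconds."""
    try:
        proc = subprocess.run(cmd, capture_output=True, text=True, timeout=timeout_s)
    except subprocess.TimeoutExpired:
        # subprocess.run kills the child before raising TimeoutExpired.
        return {"status": "timeout", "stdout": "", "stderr": f"killed after {timeout_s}s"}
    status = "ok" if proc.returncode == 0 else "error"
    return {"status": status, "stdout": proc.stdout, "stderr": proc.stderr}


if __name__ == "__main__":
    # Demonstrated with the Python interpreter; inside the container the command
    # would presumably be something like ["Rscript", "chart.R"] (an assumption).
    print(run_with_timeout([sys.executable, "-c", "print('chart done')"]))
    print(run_with_timeout([sys.executable, "-c", "while True: pass"], timeout_s=1))
```

With a 30-second limit and a 10-second average run, a legitimate chart has about three times its typical duration available, while an infinite loop is cut off at the limit.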

Moving the heavy work server-side simplified our frontend significantly. The data flow became much cleaner: the user sends a prompt and data to the backend, the LLM generates R code, Lambda executes the code with retry capability, the final chart is saved to S3, and the backend updates the application.
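The flow described above can be sketched as a small orchestration loop. Everything here is illustrative: `generate_code` and `execute` are hypothetical callables standing in for the LLM call and the Lambda invocation, the retry limit of three is an assumption, and feeding the previous error back to the LLM is one plausible reading of "retry capability" rather than a confirmed detail.

```python
MAX_ATTEMPTS = 3  # assumption: the article does not state a retry limit


def generate_chart(prompt, generate_code, execute, max_attempts=MAX_ATTEMPTS):
    """Generate R code for prompt and execute it, retrying on failure.

    generate_code(prompt, previous_error) -> str with R source code
    execute(code) -> dict with "ok" plus either "s3_key" or "error"
    """
    error = None
    for attempt in range(1, max_attempts + 1):
        code = generate_code(prompt, error)  # error is None on the first attempt
        result = execute(code)               # e.g. a synchronous Lambda invocation
        if result["ok"]:
            return {"s3_key": result["s3_key"], "attempts": attempt}
        error = result["error"]              # passed to the next generation attempt
    raise RuntimeError(f"chart generation failed after {max_attempts} attempts: {error}")
```

Keeping the LLM call and the executor as injected functions lets the loop be tested without AWS access, and bounds how many sandbox runs a single prompt can trigger.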

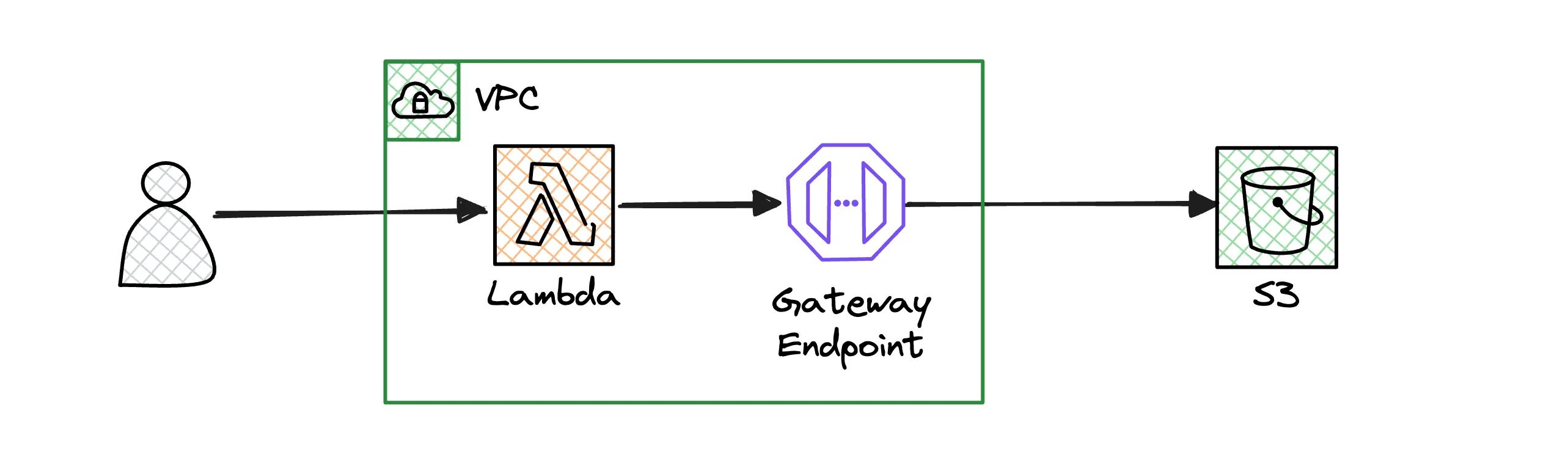

Network Isolation with VPC

Security required complete network isolation to eliminate entire categories of threats. Our Lambda functions run inside a Virtual Private Cloud with no access to the public internet. Rather than routing S3 traffic through the public internet, we configured a VPC gateway endpoint to S3. This keeps all data off the public web entirely while maintaining performance.

This creates a real sandbox where code runs in complete isolation, accessing only the resources we explicitly provide through private network connections.
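As a rough illustration of the gateway endpoint setup, the sketch below builds the request parameters for the EC2 `create_vpc_endpoint` API. The helper name, the IDs, and the bucket name are hypothetical, and restricting the endpoint policy to a single bucket is an assumed hardening step that the article does not describe.

```python
import json


def s3_gateway_endpoint_request(vpc_id, region, route_table_ids, bucket):
    """Build keyword arguments for an S3 gateway endpoint in the given VPC."""
    # Assumed hardening: only allow object reads and writes on one bucket.
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": "*",
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": f"arn:aws:s3:::{bucket}/*",
            }
        ],
    }
    return {
        "VpcEndpointType": "Gateway",
        "VpcId": vpc_id,
        "ServiceName": f"com.amazonaws.{region}.s3",
        "RouteTableIds": list(route_table_ids),
        "PolicyDocument": json.dumps(policy),
    }


if __name__ == "__main__":
    # All identifiers below are placeholders.
    request = s3_gateway_endpoint_request(
        "vpc-0123456789abcdef0", "us-east-1", ["rtb-0123456789abcdef0"], "example-charts"
    )
    print(json.dumps(request, indent=2))
```

With boto3 installed and credentials configured, the resulting dictionary could be passed as `boto3.client("ec2").create_vpc_endpoint(**request)`. Because the subnets have no NAT gateway or internet gateway route, S3 through this endpoint is the only external destination the function can reach.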

Key Takeaways: Real-World AWS Insights

Here's what we learned from running this system in production.

The Lambda Memory-CPU Quirk

On AWS Lambda, CPU power scales with the configured memory; there is no separate CPU setting. Our CPU-bound R code needed substantial processing power but little memory. We had to provision 2048MB of RAM to get sufficient CPU even though actual memory usage never exceeded 205MB, so we paid for roughly 10x more memory than we used.

The lesson: For CPU-intensive tasks with modest memory needs, you'll necessarily over-provision RAM. Factor this into cost calculations early and consider alternatives like ECS or EKS for sustained, CPU-heavy workloads.
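The arithmetic behind this can be sketched as follows. The memory figures and the 10-second average duration come from the text; the per-GB-second price is an assumed x86 on-demand rate and should be checked against current AWS pricing for your region.

```python
# Assumption: on-demand x86 price per GB-second; verify against current pricing.
PRICE_PER_GB_SECOND = 0.0000166667

PROVISIONED_MB = 2048   # memory configured to obtain enough CPU (from the text)
PEAK_USED_MB = 205      # peak memory actually used (from the text)
AVG_DURATION_S = 10     # average chart generation time (from the text)


def gb_seconds(memory_mb: float, duration_s: float) -> float:
    """Billable GB-seconds for one invocation."""
    return memory_mb / 1024 * duration_s


def compute_cost(memory_mb: float, duration_s: float, price=PRICE_PER_GB_SECOND) -> float:
    """Compute charge for one invocation, excluding the per-request fee."""
    return gb_seconds(memory_mb, duration_s) * price


if __name__ == "__main__":
    ratio = PROVISIONED_MB / PEAK_USED_MB
    print(f"over-provisioning ratio: {ratio:.1f}x")
    print(f"GB-seconds per chart: {gb_seconds(PROVISIONED_MB, AVG_DURATION_S):.1f}")
    print(f"compute cost per chart: ${compute_cost(PROVISIONED_MB, AVG_DURATION_S):.6f}")
```

The ratio describes memory billed versus memory used, not an achievable saving: lowering the memory setting also lowers the CPU share, so the same R code would run longer and the GB-seconds would not fall proportionally.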

The Hybrid Development Workflow

We adopted a hybrid approach: running the main web service locally while connecting to a shared Lambda function in a dedicated AWS development environment. This provides fast iteration on application logic while testing Lambda against actual AWS infrastructure, eliminating environment mismatches.

The lesson: Don't fight against the cloud's architecture. Build development workflows that treat Lambda as a cloud service rather than attempting perfect local replication.
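One way to wire up such a hybrid setup is to have the locally running service call the shared development function through the regular Lambda `invoke` API. This is a minimal sketch: the `CHART_LAMBDA_NAME` environment variable and the `chart-runner-dev` default are hypothetical names, and the payload shape is an assumption.

```python
import json
import os


def lambda_function_name(env=None):
    """Resolve the target function; both names here are hypothetical."""
    env = os.environ if env is None else env
    return env.get("CHART_LAMBDA_NAME", "chart-runner-dev")


def invoke_chart_lambda(payload, client=None):
    """Synchronously invoke the shared Lambda and return its decoded JSON response."""
    if client is None:
        import boto3  # imported lazily so the module loads without AWS dependencies

        client = boto3.client("lambda")
    response = client.invoke(
        FunctionName=lambda_function_name(),
        InvocationType="RequestResponse",  # wait for the result
        Payload=json.dumps(payload).encode("utf-8"),
    )
    return json.loads(response["Payload"].read())
```

Accepting the client as a parameter keeps the local service testable with a stub, while the default path talks to the real function in the development account.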

What We Learned About Building Secure Sandboxes on AWS

Moving from WebAssembly to AWS Lambda taught us lessons about architecture, security, and cloud pricing. Here are the key principles we discovered while building a production sandbox for untrusted code.

- Choose server-side execution for complex data flows: Browser-based execution breaks down when applications require back-and-forth communication with backend services. AI agents that need to query, analyze, and iterate on data fit this pattern perfectly.
- VPC isolation is straightforward and effective: A Lambda function in a VPC without internet access, combined with gateway endpoints for necessary AWS services, provides robust isolation with minimal configuration.
- Docker on Lambda solves dependency challenges: Lambda's container image support provides precise control over runtime environments and dependencies, eliminating "works on my machine" problems.
- Understand pricing model mismatches early: Lambda's memory-CPU coupling means CPU-bound workloads necessarily over-provision memory. Identify these mismatches during architecture design rather than in production bills.

Conclusion

WebAssembly works well for isolated, compute-heavy tasks that don't need backend communication. But Quesma's chart generation required back-and-forth communication with backend services for data analysis and iteration. Different bottlenecks meant we needed a different solution.

Lambda proved to be the perfect fit for our use case. It handles unpredictable workloads automatically, provides built-in isolation, and eliminates server management overhead. The combination of Docker containers, VPC isolation, and automatic scaling gave us both security and operational simplicity.

Choose your execution environment based on your data flow patterns. For complex workflows requiring backend integration, server-side execution with proper sandboxing is often the better path.
